The field of artificial intelligence is undergoing a quiet revolution, one that looks increasingly to the human brain for inspiration. At the heart of this shift lies a groundbreaking innovation: neuromorphic "bionic chips" that promise to cut energy consumption by as much as a thousandfold compared to traditional computing architectures. These brain-inspired silicon designs are not just incremental improvements; they represent a fundamental rethinking of how machines process information.

For decades, computer scientists have struggled with what's become known as the "von Neumann bottleneck." Traditional computers separate memory and processing units, forcing data to shuttle back and forth across this divide like commuters in rush hour traffic. The result? Massive energy waste and inherent speed limitations. Neuromorphic chips shatter this paradigm by mimicking the brain's architecture where memory and processing coexist in a dense web of synapses and neurons.

Researchers at leading institutions have made startling progress in developing these bio-inspired processors. One prototype chip containing just 100,000 artificial neurons, a tiny fraction of the human brain's 86 billion, demonstrated the ability to perform complex pattern recognition tasks while drawing less power than a hearing aid. This efficiency stems from the chip's event-driven design: unlike conventional processors, which are clocked continuously whether or not new data has arrived, neuromorphic circuits remain dormant until they receive electrical pulses, much like biological neurons.
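
To make the event-driven idea concrete, here is a minimal Python sketch of a leaky integrate-and-fire neuron that does work only when an input spike arrives. The leaky integrate-and-fire model is a common textbook abstraction, not necessarily what the prototype above uses, and the names `LIFNeuron`, `receive`, and `run`, along with the threshold and time-constant values, are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron updated only on input events."""
    threshold: float = 1.0    # firing threshold (assumed value)
    tau_ms: float = 20.0      # leak time constant in ms (assumed value)
    potential: float = 0.0    # current membrane potential
    last_update_ms: float = 0.0

    def receive(self, t_ms: float, weight: float) -> bool:
        """Process one input spike at time t_ms; return True if the neuron fires."""
        # Apply the leak lazily: one decay step covers the whole idle interval,
        # so no computation happens between events.
        idle = t_ms - self.last_update_ms
        self.potential *= math.exp(-idle / self.tau_ms)
        self.last_update_ms = t_ms
        self.potential += weight
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(events):
    """events: (time_ms, weight) pairs sorted by time. Returns output spike times."""
    neuron = LIFNeuron()
    return [t for t, w in events if neuron.receive(t, w)]


if __name__ == "__main__":
    # Three closely spaced inputs accumulate past the threshold;
    # an isolated input much later leaks away without causing a spike.
    print(run([(1.0, 0.4), (2.0, 0.4), (3.0, 0.4), (200.0, 0.4)]))
```

In this sketch the cost of simulation scales with the number of incoming spikes rather than with elapsed time, which is the property the paragraph above attributes to event-driven hardware.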

The implications of this energy efficiency breakthrough extend far beyond laboratory curiosities. Consider the environmental impact: data centers currently consume about 1% of global electricity, a figure projected to quadruple by 2030. Widespread adoption of neuromorphic computing could dramatically bend this curve downward. Early estimates suggest that replacing just 10% of traditional servers with brain-inspired chips could save the annual power consumption of a small European nation.

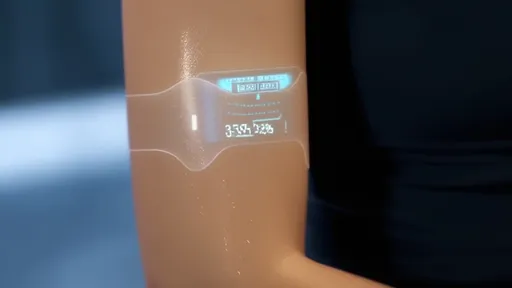

What makes these chips truly remarkable is their ability to learn and adapt. Unlike conventional AI that requires extensive pre-training on massive datasets, neuromorphic systems can adjust their synaptic weights in real-time through a process resembling biological learning. This capability opens doors to applications where energy constraints and adaptive intelligence collide – think autonomous drones that can patrol for days rather than hours, or medical implants that evolve with a patient's changing physiology.
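
One widely studied rule for this kind of on-the-fly weight adjustment is spike-timing-dependent plasticity (STDP). The article does not say which learning rule any particular chip implements, so the following Python sketch is only an illustration of the general idea; the function name `stdp_update` and every parameter value are assumptions.

```python
import math


def stdp_update(weight, t_pre_ms, t_post_ms,
                a_plus=0.05, a_minus=0.055, tau_ms=20.0,
                w_min=0.0, w_max=1.0):
    """Return the synaptic weight after one pre/post spike pair.

    Pair-based STDP with illustrative (assumed) constants:
    - pre fires before post  -> the synapse is strengthened
    - post fires before pre  -> the synapse is weakened
    The size of the change decays exponentially with the time gap.
    """
    dt = t_post_ms - t_pre_ms
    if dt > 0:
        weight += a_plus * math.exp(-dt / tau_ms)
    elif dt < 0:
        weight -= a_minus * math.exp(dt / tau_ms)
    # Keep the weight inside its allowed range.
    return min(w_max, max(w_min, weight))


if __name__ == "__main__":
    w = 0.5
    w = stdp_update(w, t_pre_ms=10.0, t_post_ms=15.0)  # causal pair: weight rises
    w = stdp_update(w, t_pre_ms=40.0, t_post_ms=32.0)  # anti-causal pair: weight falls
    print(round(w, 4))
```

Each update depends only on the timing of two local spikes, so a rule like this can run continuously on the device without a separate training phase.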

The defense sector has taken particular notice of this technology. DARPA's investments in neuromorphic computing highlight its potential for creating ultra-efficient surveillance systems and battlefield decision-making tools that don't require constant cloud connectivity. Similarly, space agencies envision these low-power chips enabling long-duration deep-space missions where traditional computing would be prohibitively energy-intensive.

Yet significant challenges remain before neuromorphic chips enter mainstream use. The biggest hurdle isn't technical but conceptual – our entire software ecosystem is built around von Neumann architectures. Developing new programming paradigms for these brain-like processors represents perhaps the most profound shift in computing since the transition from vacuum tubes to transistors. Early adopters are creating specialized languages that treat spikes (the chip's version of neural impulses) as fundamental data types rather than the binary 1s and 0s of conventional computing.
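
As a rough illustration of what treating spikes as a first-class data type might look like, the Python sketch below represents each spike as a (time, neuron) event and operates on streams of such events. It is not modeled on any specific neuromorphic language; `Spike`, `merge_streams`, and `firing_rates` are hypothetical names chosen for this example.

```python
import heapq
from collections import Counter
from dataclasses import dataclass


@dataclass(order=True, frozen=True)
class Spike:
    """A single spike event: when it happened and which neuron emitted it."""
    time_ms: float   # listed first so spikes sort by time
    neuron_id: int


def merge_streams(*streams):
    """Merge several time-sorted spike streams into one time-sorted list."""
    return list(heapq.merge(*streams))


def firing_rates(spikes, window_ms):
    """Spikes per second for each neuron over an observation window."""
    counts = Counter(s.neuron_id for s in spikes)
    return {nid: n * 1000.0 / window_ms for nid, n in counts.items()}


if __name__ == "__main__":
    stream_a = [Spike(1.0, 0), Spike(5.0, 0)]
    stream_b = [Spike(2.0, 1)]
    merged = merge_streams(stream_a, stream_b)
    print([(s.time_ms, s.neuron_id) for s in merged])
    print(firing_rates(merged, window_ms=10.0))
```

The information here lives in which neuron fired and when, rather than in fixed-width binary words, which is why conventional data structures and toolchains map onto it awkwardly.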

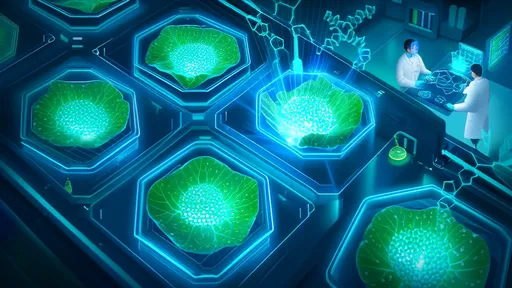

Commercialization efforts are already underway, with several startups and semiconductor giants racing to bring neuromorphic products to market. One company has developed a chip that processes visual information with 1,000 times greater energy efficiency than GPUs while achieving comparable accuracy in object recognition tasks. Another has created a system that learns Morse code patterns in real-time using power levels typically associated with digital watches rather than computers.

The human brain remains the gold standard for efficient computation: by some estimates it performs the equivalent of an exaflop (10^18 operations per second) on roughly 20 watts of power. While current neuromorphic chips still fall orders of magnitude short of this benchmark, their trajectory suggests we're entering an era where computing efficiency could improve exponentially rather than incrementally. As these bionic chips evolve, they may not just transform technology but redefine our very understanding of intelligence, both biological and artificial.
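
The brain benchmark above is easier to compare against hardware when expressed as operations per joule. The short Python sketch below does that arithmetic using only the two figures quoted in the paragraph; the helper names `ops_per_joule` and `orders_of_magnitude_gap` are illustrative, and no chip figures are assumed.

```python
import math


def ops_per_joule(ops_per_second: float, watts: float) -> float:
    """Energy efficiency: operations performed per joule of energy.

    One watt is one joule per second, so ops/s divided by watts gives ops/J.
    """
    return ops_per_second / watts


def orders_of_magnitude_gap(reference: float, candidate: float) -> float:
    """How many powers of ten separate a candidate from a reference efficiency."""
    return math.log10(reference / candidate)


if __name__ == "__main__":
    # Figures quoted in the text: about 1e18 operations per second on about 20 W.
    brain = ops_per_joule(1e18, 20.0)
    print(f"{brain:.1e} operations per joule")
```

To use `orders_of_magnitude_gap`, a reader would supply a measured efficiency for a specific chip; the article gives none, so none is filled in here.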

Looking ahead, the convergence of neuromorphic hardware with other breakthroughs like memristors (nanoscale devices that mimic synaptic plasticity) promises even greater leaps. Some researchers speculate that within a decade, we might see hybrid systems combining the precision of digital computing with the efficiency and adaptability of brain-inspired architectures. This fusion could birth an entirely new class of intelligent devices – ones that think like organisms while retaining the reliability of machines.

The neuromorphic revolution won't happen overnight, but its direction is clear. As climate concerns and the physical limits of traditional computing loom larger, the appeal of brain-like efficiency becomes irresistible. These bionic chips represent more than just better technology – they offer a sustainable path forward for an increasingly AI-driven world. The computers of tomorrow may not just be faster or smaller, but fundamentally different in kind, taking their cues from three pounds of gray matter that's been perfecting its design for millions of years.

By /Aug 18, 2025
